Harnessing the Möbius Loop for a Revolutionary DevOps Process

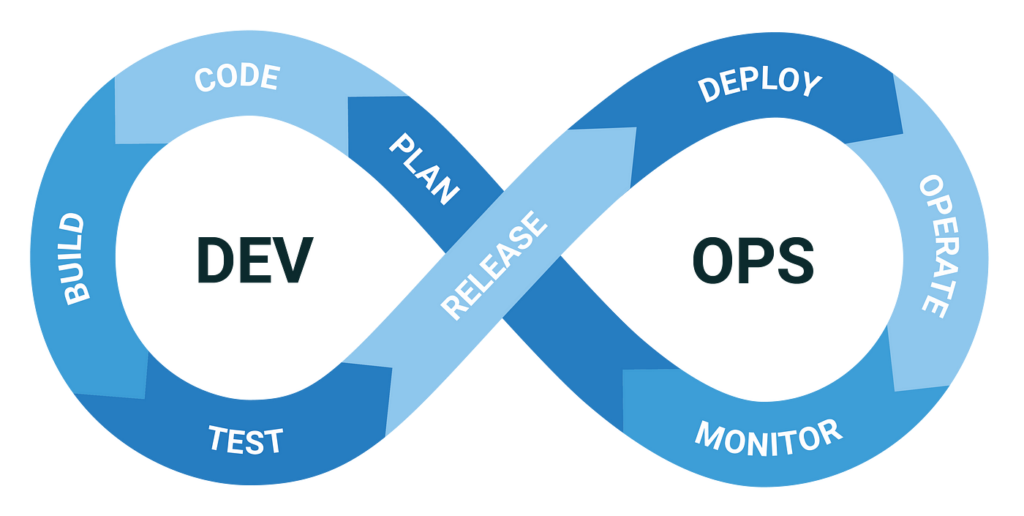

In DevOps, continual improvement and iteration are the name of the game. The Möbius loop, a surface with only one side and one boundary, can serve as both a vivid metaphor and a blueprint for a DevOps process that is unified and endlessly adaptable. Let's look at the Möbius loop concept and how it maps onto the principles of DevOps.

Understanding the Möbius Loop

The Möbius loop, or Möbius strip, is a remarkable mathematical object: a surface with only one side and one boundary. You can make one by giving a strip of paper a half-twist and then joining its ends. Trace a line down the middle of the strip and you return to your starting point having covered both faces of the original paper without ever crossing an edge, which makes it a fitting picture of the unending development cycle in DevOps.

Reference: Möbius Strip – Wikipedia

The Möbius Loop and DevOps: A Perfect Harmony

In DevOps, the Möbius loop stands for a continuous cycle in which each phase flows naturally into the next, creating a feedback loop that drives ongoing growth. This philosophy lies at the heart of DevOps, which promotes collaboration and iterative progress.

Reference: DevOps and Möbius Loop — A Journey to Continuous Improvement

Crafting a Möbius Loop-Based DevOps Process

Building a DevOps process on the Möbius loop principle means setting up a workflow in which each phase feeds the next, forming a feedback loop that keeps evolving. Here is a step-by-step guide to creating this iterative, robust system:

1. Define Objectives

- Business Objectives: Set clear business goals and metrics.

- User Objectives: Align the goals with user expectations.

2. Identify Outcomes

- Expected Outcomes: Describe the outcomes you want for the business and for users.

- Metrics: Define metrics that show whether those outcomes are being achieved.
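To make the Metrics step more concrete, here is a minimal Python sketch that pairs each metric with a target and checks observed values against it. The `Metric` class, the `scorecard` helper, and the metric names and numbers are hypothetical, chosen only for illustration; in practice the observed values would come from your monitoring tools.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A measurable outcome with a target value (illustrative structure only)."""
    name: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        # Compare the observed value with the target in the right direction.
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


def scorecard(metrics: list[Metric], observed: dict[str, float]) -> dict[str, bool]:
    """For every metric that has an observation, report whether its target was met."""
    return {m.name: m.is_met(observed[m.name]) for m in metrics if m.name in observed}


if __name__ == "__main__":
    # Hypothetical outcome metrics for one cycle of the loop.
    metrics = [
        Metric("signup_conversion_pct", target=4.0),
        Metric("p95_latency_ms", target=300.0, higher_is_better=False),
    ]
    observed = {"signup_conversion_pct": 4.6, "p95_latency_ms": 340.0}
    print(scorecard(metrics, observed))
```

The `higher_is_better` flag lets one structure cover both "more is better" metrics such as conversion and "less is better" metrics such as latency.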

3. Discovery and Framing

- Research: Invest time in understanding user preferences and pain points.

- Hypothesis: Formulate testable hypotheses about how to meet business and user objectives.

4. Develop and Deliver

- Build: Employ agile methodologies to build solutions incrementally.

- Deploy: Use CI/CD pipelines for continuous deployment.

Reference: Utilizing Agile Methodologies in DevOps
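At its core, a CI/CD pipeline is an ordered series of stages in which any failure stops the run before a bad change reaches production. The Python sketch below models only that fail-fast idea; the stage names and the `run_pipeline` helper are hypothetical, and a real pipeline would be defined in your CI tool's own configuration format rather than in application code.

```python
from typing import Callable

# A stage is a (name, check) pair; the check returns True on success.
# This contract is an assumption made for the sketch.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order and stop at the first failure (fail fast).

    Returns the overall result and the names of the stages that actually ran.
    """
    ran: list[str] = []
    for name, check in stages:
        ran.append(name)
        if not check():
            print(f"stage '{name}' failed; skipping remaining stages")
            return False, ran
        print(f"stage '{name}' passed")
    return True, ran


if __name__ == "__main__":
    pipeline: list[Stage] = [
        ("build", lambda: True),
        ("unit-tests", lambda: True),
        ("integration-tests", lambda: False),  # simulate a failing stage
        ("deploy", lambda: True),              # never reached
    ]
    ok, ran = run_pipeline(pipeline)
    print(ok, ran)
```

Returning the list of stages that ran makes it easy to see where a cycle stopped, which is exactly the information the Operate and Observe phase needs to feed back.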

5. Operate and Observe

- Monitor: Use monitoring tools to collect data on system performance.

- Feedback Loop: Establish channels to receive user feedback.
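For the Monitor step, Prometheus exposes an HTTP API whose `/api/v1/query` endpoint evaluates a PromQL expression at a single point in time. The sketch below uses only the Python standard library to build such a query URL and parse an instant-vector response. The helper names are hypothetical, the server address `http://localhost:9090` is an assumption (9090 is Prometheus's default port), and error handling is deliberately minimal: HTTP error statuses surface as `urllib.error.HTTPError`.

```python
import json
import urllib.parse
import urllib.request

Sample = tuple[dict, float]  # (label set, sample value)


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(payload: dict) -> list[Sample]:
    """Extract (labels, value) pairs from an instant-vector query response."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error', 'unknown error')}")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector result, got {data['resultType']!r}")
    # Each entry's "value" is [unix_timestamp, "value encoded as a string"].
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]


def query_prometheus(base_url: str, promql: str, timeout: float = 5.0) -> list[Sample]:
    """Run an instant query against a live Prometheus server and parse the result."""
    with urllib.request.urlopen(build_query_url(base_url, promql), timeout=timeout) as resp:
        return parse_vector(json.load(resp))


if __name__ == "__main__":
    # Offline demo with a response shaped like Prometheus's; no server needed.
    sample = {
        "status": "success",
        "data": {
            "resultType": "vector",
            "result": [
                {"metric": {"__name__": "up", "job": "prometheus"}, "value": [1700000000.0, "1"]},
            ],
        },
    }
    for labels, value in parse_vector(sample):
        print(labels["job"], value)
```

Against a running server, `query_prometheus("http://localhost:9090", "up")` would return one `(labels, value)` pair per scrape target, where `up` is 1 if the last scrape succeeded and 0 if it failed.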

6. Learning and Iteration

- Analyze: Scrutinize the data and feedback gathered in the Operate and Observe phase.

- Learn: Apply the insights you have gained to improve the solution.
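One way to make Analyze and Learn concrete is to check each hypothesis from the Discovery and Framing phase against what was actually observed. The Python sketch below assumes a hypothesis can be phrased as "this change will move metric M by at least Z percent relative to a baseline"; the `Hypothesis` class, the `evaluate` helper, and the example numbers are hypothetical. A real analysis should also account for noise and statistical significance, which this sketch ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """'Change X will move metric M by at least Z' (illustrative structure only)."""
    statement: str
    metric: str
    baseline: float
    min_relative_change: float  # e.g. 0.10 means "at least 10% better"
    higher_is_better: bool = True


def evaluate(h: Hypothesis, observed: float) -> tuple[str, float]:
    """Return a verdict and the relative change of the observed value versus the baseline."""
    if h.baseline == 0:
        raise ValueError("baseline must be non-zero to compute a relative change")
    change = (observed - h.baseline) / h.baseline
    # For "lower is better" metrics (e.g. latency), a decrease counts as improvement.
    improvement = change if h.higher_is_better else -change
    verdict = "validated" if improvement >= h.min_relative_change else "not validated"
    return verdict, change


if __name__ == "__main__":
    h = Hypothesis(
        statement="A shorter checkout form raises conversion by at least 10%",
        metric="checkout_conversion_pct",
        baseline=2.0,
        min_relative_change=0.10,
    )
    verdict, change = evaluate(h, observed=2.3)
    print(f"{h.metric}: {change:+.1%} -> {verdict}")
```

A "not validated" verdict is still useful output: it is exactly the kind of learning the Feedback and Adjust step turns into revised goals or a new hypothesis.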

7. Feedback and Adjust

- Feedback: Gather feedback from all stakeholders.

- Adjust: Revise goals, metrics, or the solution based on the feedback received.

8. Loop Back

- Iterative Process: Repeat the process, informed by what previous cycles have taught you.

- Continuous Improvement: Encourage a mindset of perpetual growth and improvement.
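The Loop Back step is what turns the eight steps into a cycle: whatever one cycle learns becomes the starting point of the next. The minimal Python sketch below condenses the steps into four placeholder phase functions that share a state dictionary. The phase functions, the simulated metric, and the rule of raising the target by 0.5 once it is met are all hypothetical stand-ins for real work, chosen only to show state carrying over between cycles.

```python
from typing import Callable

# Each phase reads and updates a shared state dict (a simplifying assumption).
State = dict
Phase = Callable[[State], None]


def define_objectives(state: State) -> None:
    # Set an initial target once; later cycles keep the adjusted target.
    state.setdefault("target", 4.0)


def develop_and_deliver(state: State) -> None:
    # Placeholder for building and shipping a change; here it nudges the metric up.
    state["observed"] = state.get("observed", 3.0) + 0.5


def learn(state: State) -> None:
    # Compare what was observed with the current target.
    state["target_met"] = state["observed"] >= state["target"]


def adjust(state: State) -> None:
    # Raise the bar once a target is met, so the next cycle aims higher.
    if state["target_met"]:
        state["target"] += 0.5


PHASES: list[tuple[str, Phase]] = [
    ("define objectives", define_objectives),
    ("develop and deliver", develop_and_deliver),
    ("learn", learn),
    ("adjust", adjust),
]


def run_cycles(cycles: int) -> list[dict]:
    """Run all phases repeatedly; each cycle starts from the previous cycle's state."""
    state: State = {}
    history = []
    for cycle in range(1, cycles + 1):
        for _name, phase in PHASES:
            phase(state)
        history.append({"cycle": cycle, **state})
    return history


if __name__ == "__main__":
    for row in run_cycles(3):
        print(row)
```

Running `run_cycles(3)` shows the target rising after each cycle in which it is met, which is the "each cycle builds on the last" idea in miniature.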

Tools to Embark on Your Möbius Loop Journey

The right tools make this Möbius loop-based DevOps process practical. The following tools provide a strong foundation:

- Version Control: Git for source code management.

- CI/CD: Jenkins or GitLab CI/CD for automating builds, tests, and deployments, and Argo CD for GitOps-style delivery to Kubernetes.

- Containerization and Orchestration: Podman to build and run containers, and Kubernetes to orchestrate them.

- Monitoring and Logging: Tools like Prometheus for collecting metrics, real-time monitoring, and alerting.

- Collaboration Tools: Slack or Rocket.Chat to foster communication and collaboration.

Reference: Top Tools for DevOps

Conclusion

Embracing the Möbius loop in DevOps opens a path to continuous improvement that fits the way development and operations naturally work together. The loop is a tangible picture of the endless cycle of innovation, and a process built on it fosters a system that is robust, adaptable, and user-centric. As you shape your own DevOps process around the Möbius loop principle, remember that you are building a culture of continuous evolution and growth, one that brings you closer to your objectives with each cycle.

Feeling inspired to set your Möbius loop DevOps process in motion? Share your thoughts and experiences in the comments below!