Automating Kubernetes Clusters

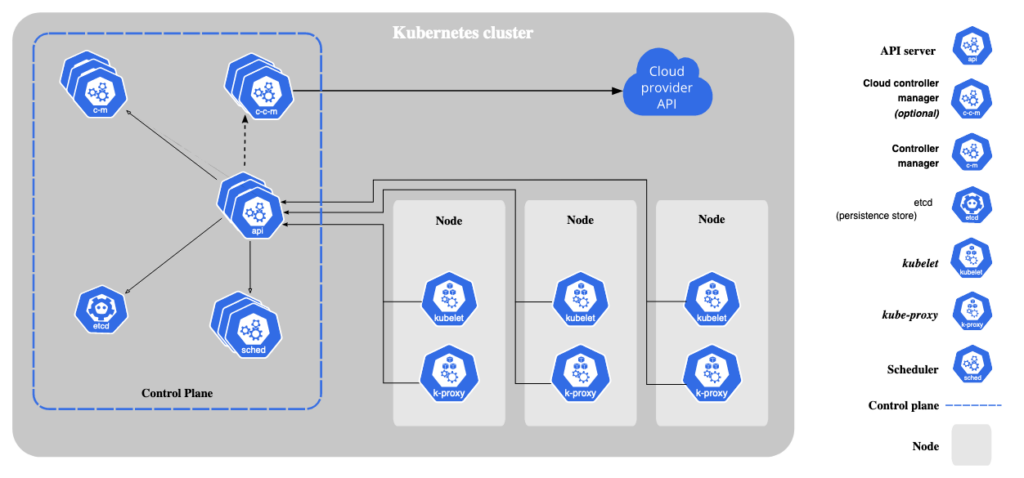

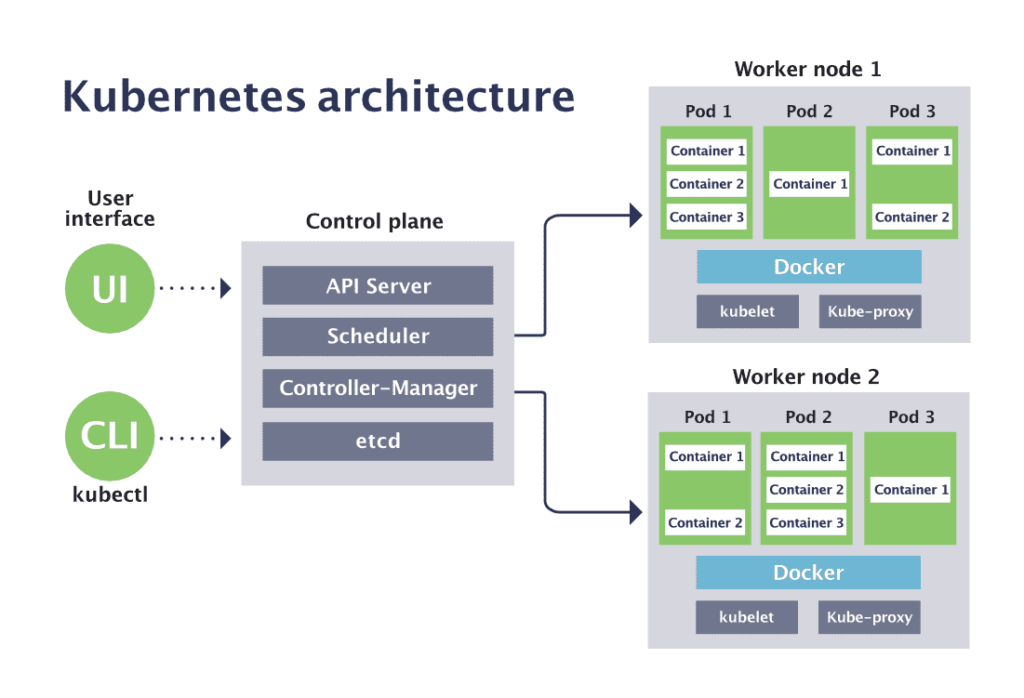

Kubernetes has become the de facto standard for container orchestration, powering modern cloud-native applications. As organizations scale their infrastructure, managing Kubernetes clusters efficiently becomes increasingly important. Manual cluster provisioning is time-consuming and error-prone, which leads to operational inefficiencies. To address these challenges, the Kubernetes community created the Cluster API, a subproject that lets you manage Kubernetes clusters through a Kubernetes-native, declarative API. In this blog post, we’ll look at how to use ClusterClass and the Cluster API to automate the creation of Kubernetes clusters.

Let’s understand ClusterClass

ClusterClass is a Kubernetes Custom Resource Definition (CRD) introduced as part of the Cluster API. It serves as a blueprint for defining the desired state of a Kubernetes cluster. ClusterClass encapsulates various configuration parameters such as node instance types, networking settings, and authentication mechanisms, enabling users to define standardized cluster configurations.

Setting Up Cluster API

Before diving into ClusterClass, you need to set up the Cluster API components in a Kubernetes cluster that acts as the management cluster. This typically involves deploying the core Cluster API controllers together with an infrastructure provider such as AWS, Azure, or vSphere, depending on where your clusters will run.
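As one possible setup, assuming you install Cluster API with the `clusterctl` CLI (the post does not prescribe a tool), ClusterClass support has to be switched on before the providers are initialized, because upstream releases place it behind the `ClusterTopology` feature gate. A minimal sketch of a clusterctl configuration file that does this:

```yaml
# Assumed location: $XDG_CONFIG_HOME/cluster-api/clusterctl.yaml
# clusterctl reads variables from this file as an alternative to
# exporting them as environment variables.

# Enables the ClusterTopology feature gate, which ClusterClass relies on.
CLUSTER_TOPOLOGY: "true"
```

With this in place, a command such as `clusterctl init --infrastructure docker` installs the core controllers and the chosen provider. The Docker provider is only an example suited to local testing; substitute the provider that matches your infrastructure.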

Creating a ClusterClass

Once the Cluster API is set up, you define a ClusterClass by creating a custom resource (CR) of kind ClusterClass. The following manifest is a simplified, schematic example; its field names are condensed for readability and do not map one-to-one onto the upstream schema:

    apiVersion: cluster.x-k8s.io/v1beta1
    kind: ClusterClass
    metadata:
      name: my-cluster-class
    spec:
      infrastructureRef:
        kind: InfrastructureCluster
        apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
        name: my-infrastructure-cluster
      topology:
        controlPlane:
          count: 1
          machine:
            type: my-control-plane-machine
        workers:
          count: 3
          machine:
            type: my-worker-machine
      versions:
        kubernetes:
          version: 1.22.4

In this example:

- `metadata.name` specifies the name of the ClusterClass.
- `spec.infrastructureRef` references the InfrastructureCluster CR that defines the underlying infrastructure provider details.
- `spec.topology` describes the desired cluster topology, including the number and type of control plane and worker nodes.
- `spec.versions.kubernetes.version` specifies the desired Kubernetes version.
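For comparison, here is a rough sketch of how such a blueprint is laid out in the upstream `cluster.x-k8s.io/v1beta1` API, where a ClusterClass points at reusable templates instead of embedding node counts. It assumes the Docker infrastructure provider and the kubeadm bootstrap and control plane providers; every template name and the worker class name `default-worker` are hypothetical, and the referenced template objects would have to be created separately.

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: ClusterClass
metadata:
  name: my-cluster-class
spec:
  # Template for the provider-specific cluster infrastructure.
  infrastructure:
    ref:
      apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
      kind: DockerClusterTemplate
      name: my-infrastructure-cluster        # hypothetical name
  # Templates for the control plane and the machines that host it.
  controlPlane:
    ref:
      apiVersion: controlplane.cluster.x-k8s.io/v1beta1
      kind: KubeadmControlPlaneTemplate
      name: my-control-plane                 # hypothetical name
    machineInfrastructure:
      ref:
        apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
        kind: DockerMachineTemplate
        name: my-control-plane-machine       # hypothetical name
  # Named worker classes that clusters can instantiate.
  workers:
    machineDeployments:
      - class: default-worker                # hypothetical class name
        template:
          bootstrap:
            ref:
              apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
              kind: KubeadmConfigTemplate
              name: my-worker-bootstrap      # hypothetical name
          infrastructure:
            ref:
              apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
              kind: DockerMachineTemplate
              name: my-worker-machine        # hypothetical name
```

Keeping counts and versions out of the class is what makes it reusable: many clusters of different sizes can share one ClusterClass.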

Applying the ClusterClass

Once the ClusterClass is defined, apply it to the management cluster so that the Cluster API controllers can interpret the definition and orchestrate cluster creation accordingly. Applying it means creating the ClusterClass CR from the manifest:

    kubectl apply -f my-cluster-class.yaml

Managing Cluster Lifecycle

The Cluster API facilitates the entire lifecycle management of Kubernetes clusters, including creation, scaling, upgrading, and deletion. Users can modify the ClusterClass definition to adjust cluster configurations dynamically. For example, scaling the cluster can be achieved by updating the spec.topology.workers.count field in the ClusterClass and reapplying the changes.
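In the upstream v1beta1 API, replica counts and the Kubernetes version are set on a Cluster object that references the ClusterClass by name, so scaling comes down to editing that object. A sketch, assuming a class named `my-cluster-class` that defines a worker class called `default-worker`; the cluster name, the worker class name, and the deployment name `md-0` are all hypothetical:

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: my-cluster                  # hypothetical cluster name
  namespace: default
spec:
  topology:
    class: my-cluster-class         # the ClusterClass to instantiate
    version: v1.22.4                # desired Kubernetes version
    controlPlane:
      replicas: 1                   # number of control plane nodes
    workers:
      machineDeployments:
        - class: default-worker     # must match a worker class in the ClusterClass
          name: md-0                # hypothetical name for this worker pool
          replicas: 3               # edit this value to scale the pool
```

Changing `replicas` and reapplying the manifest asks the controllers to reconcile the worker pool to the new size. Changing `version` requests an upgrade of the cluster in the same declarative way.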

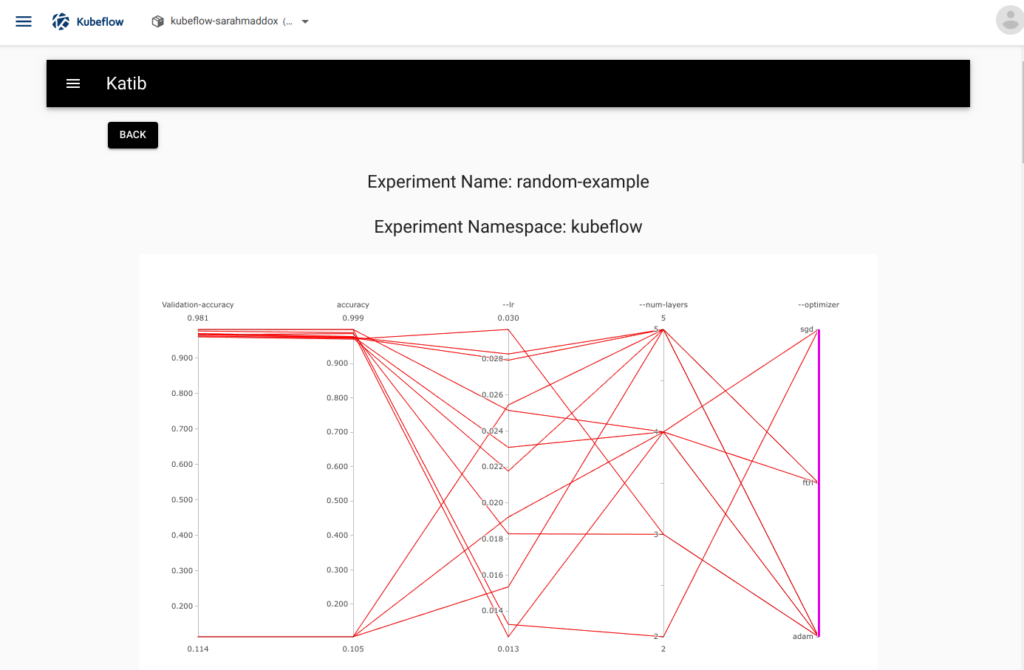

Monitoring and Maintenance

Automation of cluster creation with ClusterClass and the Cluster API streamlines the provisioning process, reduces manual intervention, and enhances reproducibility. However, monitoring and maintenance of clusters remain essential tasks. Utilizing Kubernetes-native monitoring solutions like Prometheus and Grafana can provide insights into cluster health and performance metrics.
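As a starting point for the monitoring side, here is a minimal Prometheus scrape configuration that discovers the nodes of a cluster through the Kubernetes API. It assumes Prometheus runs inside the cluster under a service account that is allowed to list nodes; the job name is arbitrary.

```yaml
scrape_configs:
  - job_name: kubernetes-nodes        # arbitrary label for this job
    scheme: https
    kubernetes_sd_configs:
      - role: node                    # one scrape target per cluster node
    tls_config:
      # CA bundle mounted into every pod by Kubernetes.
      ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    authorization:
      # Service account token used to authenticate against the kubelet.
      credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    relabel_configs:
      # Copy Kubernetes node labels onto the scraped metrics.
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
```

Grafana can then use this Prometheus instance as a data source for dashboards on node health and resource usage.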

Wrapping it up

Automating Kubernetes cluster creation using ClusterClass and the Cluster API simplifies the management of infrastructure at scale. By defining cluster configurations as code and leveraging Kubernetes-native APIs, organizations can achieve consistency, reliability, and efficiency in their Kubernetes deployments. Embracing these practices empowers teams to focus more on application development and innovation, accelerating the journey towards cloud-native excellence.