AI Workloads for Kubernetes

Introduction

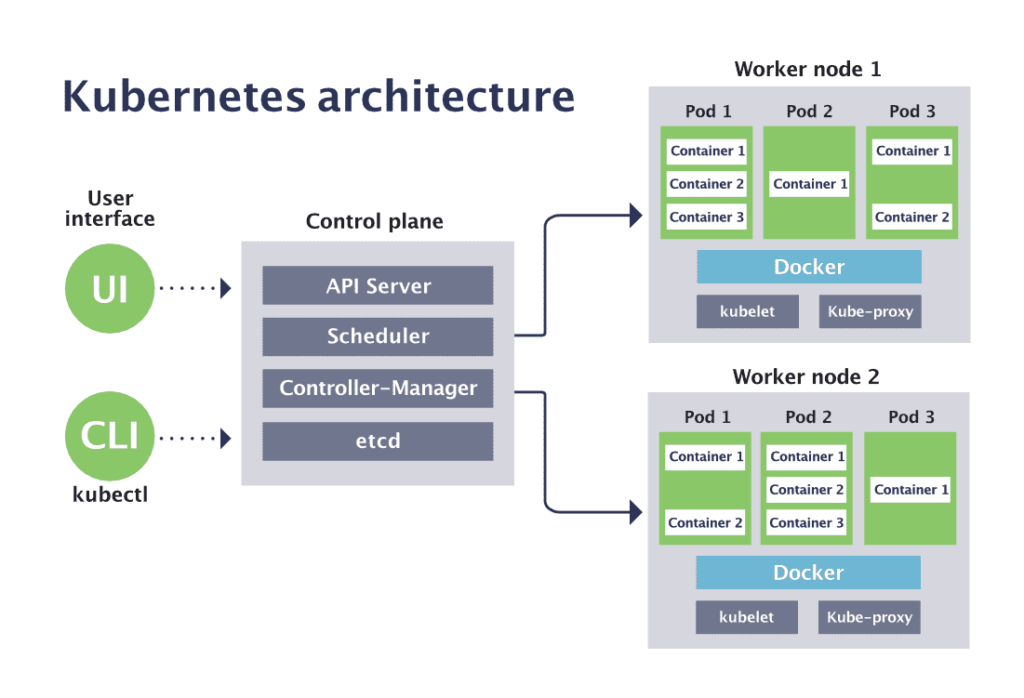

In recent years, Kubernetes has become the go-to platform for orchestrating containerized applications at scale. But does it offer the same efficiency and convenience for AI workloads? This post looks at the types of AI workloads that are best suited to Kubernetes, and why you should consider it for your next AI project.

Model Training and Development

Batch Processing

When working with large datasets, batch processing becomes a necessity. Kubernetes handles batch tasks well: its Job resource runs work to completion, retries failed pods, and can run many workers in parallel, while the scheduler places those workers wherever the cluster has spare capacity.

- Example: A machine learning pipeline that processes terabytes of data overnight, utilizing idle resources to the fullest.
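
As a rough illustration, a nightly batch run like this could be described as a `batch/v1` Job. The sketch below builds such a manifest as a plain Python dictionary. The job name, image, shard count, and resource requests are hypothetical placeholders, not values from a real pipeline.

```python
import json


def build_batch_job(name: str, image: str, shards: int, parallelism: int) -> dict:
    """Return a batch/v1 Job manifest that processes `shards` work items."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Total number of pods that must finish successfully.
            "completions": shards,
            # Upper bound on pods running at the same time.
            "parallelism": parallelism,
            # Indexed mode gives each pod a JOB_COMPLETION_INDEX env var,
            # which a worker can use to pick its shard of the dataset.
            "completionMode": "Indexed",
            # Retry budget before the Job is marked as failed.
            "backoffLimit": 3,
            "template": {
                "spec": {
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "worker",
                            "image": image,  # hypothetical image
                            "resources": {
                                "requests": {"cpu": "1", "memory": "2Gi"}
                            },
                        }
                    ],
                }
            },
        },
    }


job = build_batch_job(
    "nightly-etl", "registry.example.com/etl:latest", shards=100, parallelism=10
)
print(json.dumps(job, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied to a cluster as-is.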

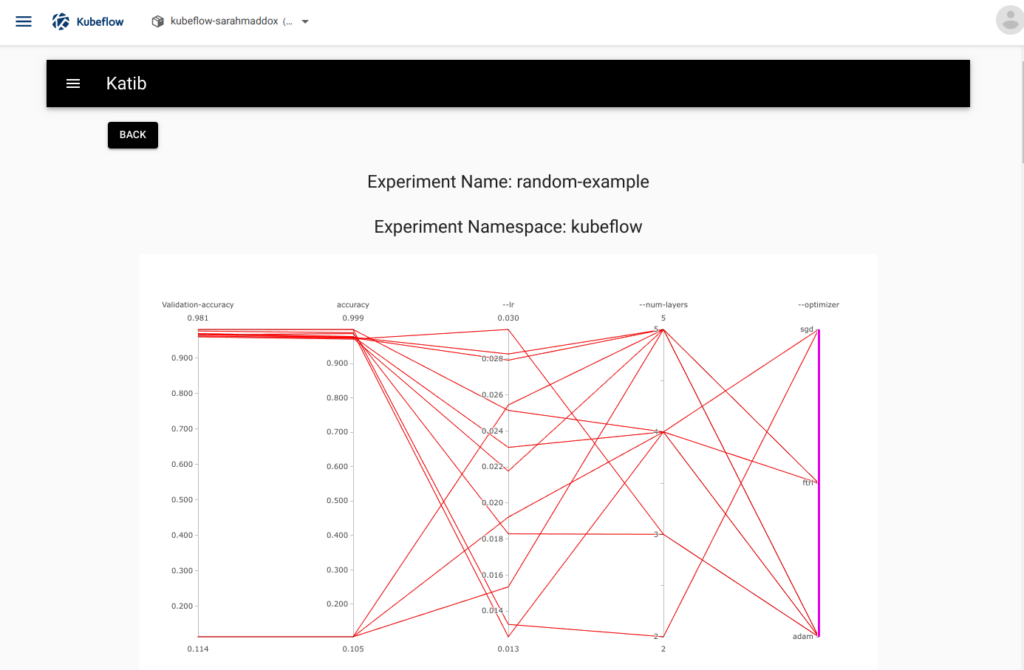

Hyperparameter Tuning

Hyperparameter tuning involves running numerous training jobs with different parameters to find the optimal configuration. Kubernetes can streamline this process by running each trial as an independent job, with many trials in parallel, limited only by cluster capacity.

- Example: An AI application that automatically tunes hyperparameters over a grid of values, reducing the time required to find the best model.
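
A grid search of this kind can be sketched as one Job per parameter combination. The example below is a minimal sketch: the image name, the `tune-` job prefix, and the command-line flag format are assumptions for illustration, and a real setup might use a dedicated tuning tool instead.

```python
from itertools import product


def grid_jobs(image: str, grid: dict) -> list:
    """Build one batch/v1 Job manifest per hyperparameter combination."""
    names = sorted(grid)  # fixed order so job numbering is reproducible
    jobs = []
    for index, values in enumerate(product(*(grid[n] for n in names))):
        params = dict(zip(names, values))
        # Pass each hyperparameter to the (hypothetical) training script as a flag.
        args = [f"--{key}={value}" for key, value in params.items()]
        jobs.append(
            {
                "apiVersion": "batch/v1",
                "kind": "Job",
                "metadata": {"name": f"tune-{index}"},
                "spec": {
                    "backoffLimit": 1,
                    "template": {
                        "spec": {
                            "restartPolicy": "Never",
                            "containers": [
                                {"name": "trial", "image": image, "args": args}
                            ],
                        }
                    },
                },
            }
        )
    return jobs


grid = {"learning_rate": [0.01, 0.1], "batch_size": [32, 64, 128]}
jobs = grid_jobs("registry.example.com/train:latest", grid)
print(len(jobs))  # 2 learning rates x 3 batch sizes = 6 trials
```

Because the trials do not depend on each other, the cluster can run as many of them at once as its capacity allows.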

Model Deployment

Rolling Updates and Rollbacks

Deploying AI models to production requires a system that supports rolling updates and rollbacks. Kubernetes excels here: a Deployment replaces pods gradually, so some replicas keep serving traffic during an update, and a bad release can be rolled back to the previous revision.

- Example: A recommendation system that undergoes frequent updates without experiencing downtime, ensuring a seamless user experience.
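
How much capacity stays online during an update depends on the Deployment's `maxSurge` and `maxUnavailable` settings. The sketch below works through that arithmetic. The function name `rollout_bounds` is made up for this post, and the rounding (up for surge, down for unavailable) follows the behavior described in the Kubernetes Deployment documentation.

```python
import math


def rollout_bounds(replicas: int, max_surge, max_unavailable) -> tuple:
    """Return (minimum available pods, maximum total pods) during a rolling update."""

    def resolve(value, round_up: bool) -> int:
        # Values may be absolute counts (3) or percentages of replicas ("25%").
        if isinstance(value, str) and value.endswith("%"):
            amount = replicas * int(value[:-1]) / 100
            return math.ceil(amount) if round_up else math.floor(amount)
        return int(value)

    surge = resolve(max_surge, round_up=True)
    unavailable = resolve(max_unavailable, round_up=False)
    return replicas - unavailable, replicas + surge


# Strategy block as it would appear under a Deployment's spec.
strategy = {
    "type": "RollingUpdate",
    "rollingUpdate": {"maxSurge": "25%", "maxUnavailable": "25%"},
}

low, high = rollout_bounds(10, "25%", "25%")
print(low, high)  # 8 13
```

With 10 replicas and both settings at 25%, at least 8 pods stay available and at most 13 exist at any point in the rollout. If the new version misbehaves, `kubectl rollout undo` on the Deployment returns it to the previous revision.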

Auto-Scaling

AI applications often face variable traffic, requiring a system that can automatically scale resources. Kubernetes autoscaling, for example through the Horizontal Pod Autoscaler, adds or removes replicas based on observed metrics such as CPU utilization, so your application can handle spikes in usage without manual intervention.

- Example: A voice recognition service that scales up during peak hours, accommodating a large number of simultaneous users without compromising on performance.
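
The core of the Horizontal Pod Autoscaler's decision is a simple ratio: desired replicas = ceil(current replicas × current metric / target metric). The sketch below reproduces only that calculation, with the documented default tolerance of 10% and a min/max clamp. The real controller also accounts for pod readiness, missing metrics, and stabilization windows, which are left out here.

```python
import math


def desired_replicas(
    current: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Simplified version of the HPA scaling formula."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    # Keep the result within the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# Peak hours: 4 pods at 90% average CPU with a 60% target.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))  # 6
# Quiet period: 6 pods at 30% average CPU with the same target.
print(desired_replicas(6, 30, 60, min_replicas=2, max_replicas=10))  # 3
```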

Data Engineering

Data Pipeline Orchestration

Managing data pipelines efficiently is critical in AI projects. Kubernetes can run each stage of a complex data pipeline as its own container, and workflow tools built on top of it can sequence the stages and retry the ones that fail.

- Example: A data ingestion pipeline that collects, processes, and stores data from various sources, running smoothly with the help of Kubernetes orchestration.
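
Ordering the stages is the part a workflow layer has to solve, since plain Kubernetes Jobs do not express dependencies on each other. The sketch below uses Python's standard `graphlib` module to group a pipeline into waves of steps that can run in parallel. The step names are hypothetical, and in practice each step would be launched as its own Job or workflow task.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it starts.
pipeline = {
    "collect_api": set(),
    "collect_logs": set(),
    "process": {"collect_api", "collect_logs"},
    "store": {"process"},
}


def execution_waves(dependencies: dict) -> list:
    """Group steps into waves; every step in a wave can run concurrently."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # Mark the whole wave as finished so dependents become ready.
        sorter.done(*ready)
    return waves


for number, wave in enumerate(execution_waves(pipeline), start=1):
    print(number, wave)
```

Here both collection steps form the first wave, followed by processing and then storage.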

Stream Processing

For real-time AI applications, stream processing is a crucial component. Kubernetes facilitates the deployment and management of stream processing workloads, restarting failed workers and rescheduling them onto healthy nodes so that processing stays available and tolerates faults.

- Example: A fraud detection system that analyzes transactions in real time, leveraging Kubernetes to maintain a steady flow of data processing.

Conclusion

Kubernetes offers a robust solution for deploying and managing AI workloads at scale. Features such as auto-scaling, rolling updates, and efficient batch processing make it an excellent choice for AI practitioners who want to streamline their operations and bring their solutions to market quickly.

Whether you are working on model training, deployment, or data engineering, Kubernetes provides the tools to orchestrate your workloads effectively, saving time and reducing complexity.

To get started with Kubernetes for your AI projects, consider exploring the rich ecosystem of tools and communities available to support you on your journey.