Recent Articles

-

OpenTelemetry Achieves GA Status as 89% of Organizations Consolidate Observability Stacks

The observability landscape has reached a pivotal moment. OpenTelemetry, the Cloud Native Computing Foundation’s flagship observability project, has achieved General Availability status, coinciding with a…

-

Implementing Cloudflare Tunnel for Secure Home Lab Access: A Complete Technical Guide

Executive Summary: What is Cloudflare Tunnel and why is it essential for secure home lab access? Cloudflare Tunnel (formerly Argo Tunnel) is a secure tunneling…

-

GitOps Goes Mainstream: ArgoCD Surpasses 20,000 GitHub Stars as Enterprise Adoption Triples

The DevOps landscape witnessed a seismic shift in 2024 as GitOps evolved from an emerging practice to a mainstream enterprise standard. With ArgoCD crossing the milestone…

-

Kubernetes Cost Optimization Matures: Leading Companies Cut Cloud Spending by 35% Using FinOps

As Kubernetes adoption soars to 96% among cloud-native organizations, a critical challenge has emerged: managing the astronomical costs of container orchestration at scale. With the…

-

Policy as Code Reaches 71% Enterprise Adoption as DevSecOps Shifts Left Successfully

The DevSecOps landscape has undergone a dramatic transformation in 2024, moving far beyond the early days of basic security scanning to embrace comprehensive policy automation.…

-

A Guide to MCP for Kubernetes Management

As enterprises increasingly adopt Kubernetes for container orchestration, the complexity of managing distributed systems continues to grow. Enter the Model Context Protocol (MCP) – a…

-

How Platform Engineering Reduced Developer Cognitive Load by 40% – Spotify’s Backstage Journey

Introduction: In 2024, Platform Engineering emerged as one of the most critical disciplines in software development, fundamentally changing how organizations approach developer productivity and infrastructure…

-

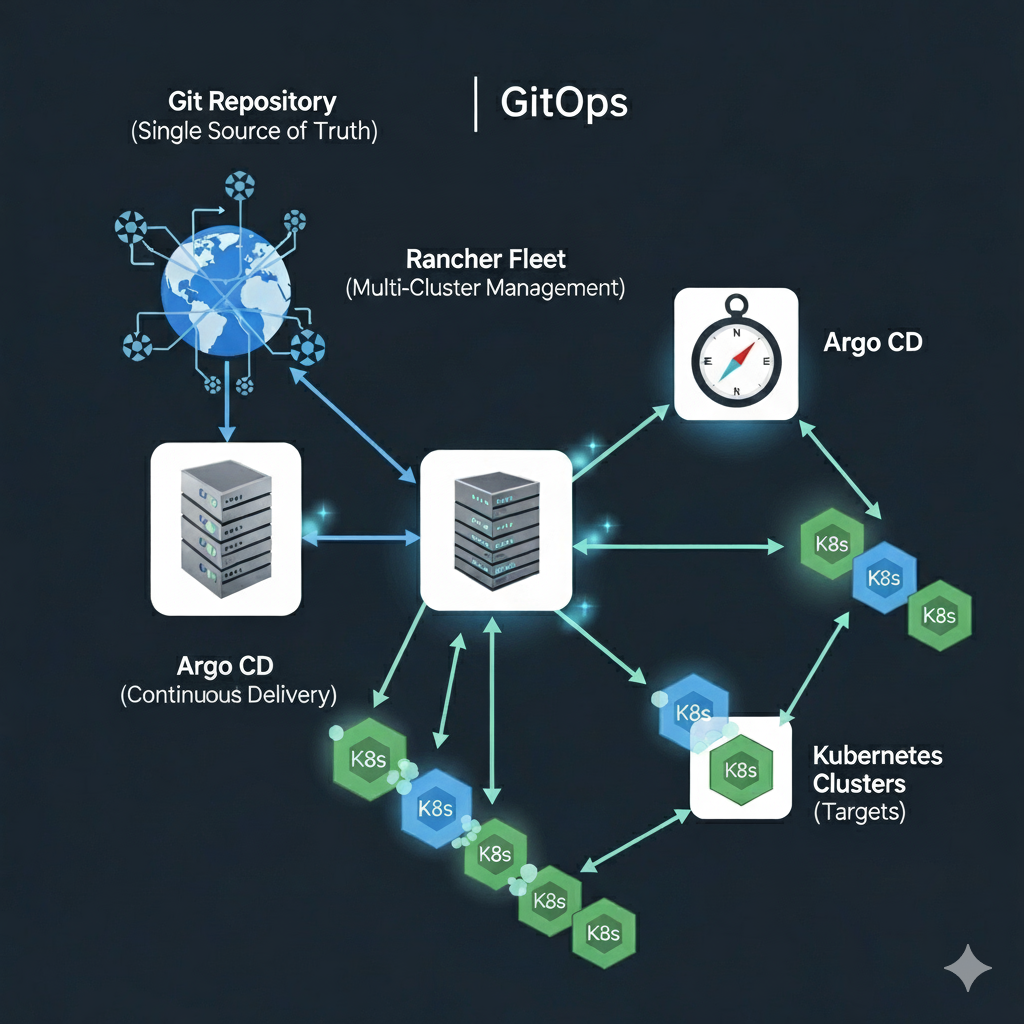

How do I: Manage Infrastructure at Scale with Rancher Fleet

Part 3: Scaling GitOps with Rancher Fleet – Managing Infrastructure at Scale. Recap of Part 1: In the second part of this series, we built…

-

How do I: Build a GitOps Pipeline in Argo

Part 2: Building a GitOps Pipeline with Rancher Fleet and Argo – From Code to Deployment. Recap of Part 1: In the first part of…

-

Mastering Harbor and ArgoCD Integration: A Complete Guide for Enterprise DevOps

Learn how to integrate Harbor container registry with ArgoCD for secure, scalable GitOps workflows. Complete guide covering setup, security best practices, common challenges, and enterprise…

-

Enterprise GitOps with ArgoCD and Harbor on RKE2: Complete Integration Guide

Introduction: Building the Complete GitOps Stack. In Part 1 of this series, we established a robust Harbor container registry on RKE2 using SUSE Application Collection.…

-

Enterprise Harbor Registry on RKE2 with SUSE Application Collection: Complete Setup Guide

Complete guide to deploying Harbor container registry on RKE2 using SUSE Application Collection. Step-by-step instructions for enterprise-grade container management with security, monitoring, and high availability.