Platform Engineer

-

OpenTelemetry Achieves GA Status as 89% of Organizations Consolidate Observability Stacks

The observability landscape has reached a pivotal moment. OpenTelemetry, the Cloud Native Computing Foundation’s flagship observability project, has achieved General Availability status,…

-

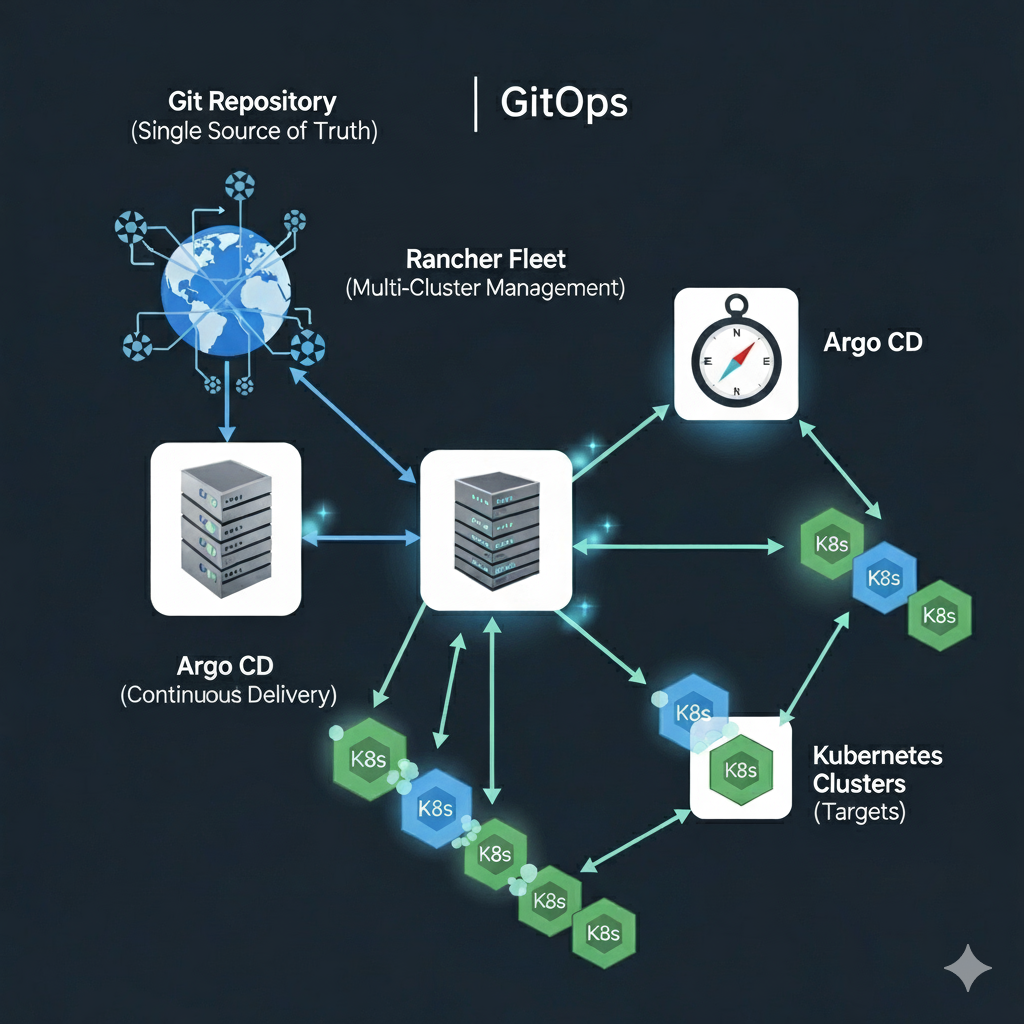

How do I: Manage Infrastructure at Scale with Rancher Fleet

Part 3: Scaling GitOps with Rancher Fleet – Managing Infrastructure at Scale. Recap of Part 1: In the second part of this…

-

How do I: Build a GitOps Pipeline in Argo

Part 2: Building a GitOps Pipeline with Rancher Fleet and Argo – From Code to Deployment. Recap of Part 1: In the…

-

Mastering Harbor and ArgoCD Integration: A Complete Guide for Enterprise DevOps

Learn how to integrate Harbor container registry with ArgoCD for secure, scalable GitOps workflows. Complete guide covering setup, security best practices, common…

-

Enterprise GitOps with ArgoCD and Harbor on RKE2: Complete Integration Guide

Introduction: Building the Complete GitOps Stack. In Part 1 of this series, we established a robust Harbor container registry on RKE2 using…
